Network Latency - How It Impacts Your Web Performance

What is network latency

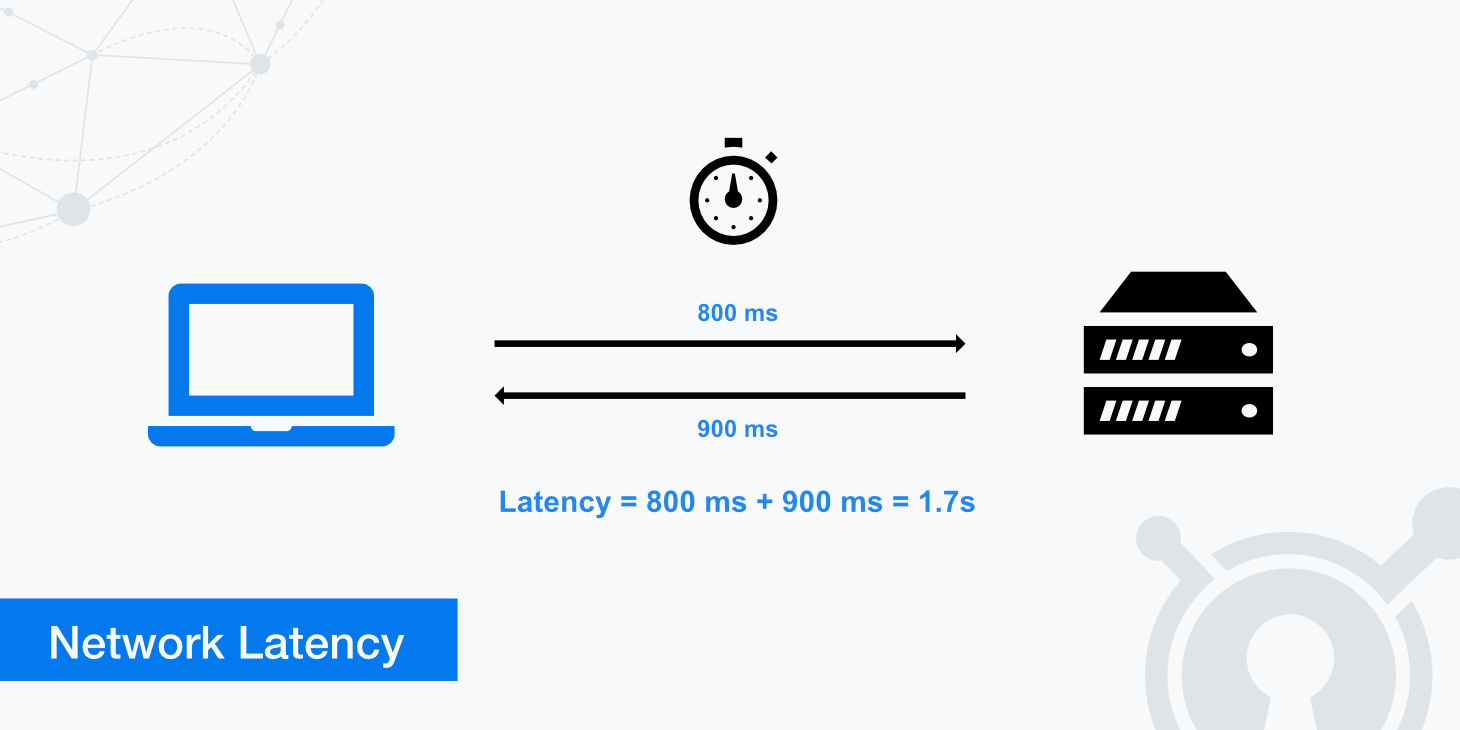

Many people have likely heard the term latency before, but what is latency exactly? Network latency can be defined as the time it takes for a request to travel from the sender to the receiver, be processed, and for the response to make its way back. In other words, it is the round trip time from the browser to the server. Ideally, this time should stay as close to 0 as possible.

Why network latency matters

Latency is especially important for businesses that serve visitors in a specific geographic location. Let's say you have an e-commerce business in Chicago, and 90% of your customers come from the United States. Your business would clearly benefit if your website were hosted on a server in the United States rather than in Europe or Australia. A request from a visitor near the hosting server travels a much shorter distance, and therefore completes faster, than one that has to cross an ocean.

Distance is one of the main causes of latency, but other factors can also keep your website's latency from staying low. Below, we'll go into more detail about the causes of latency and give you important tools to find out whether you've chosen the right location to host your website.

Latency vs bandwidth vs throughput

Although latency, bandwidth, and throughput work hand-in-hand, they have different meanings. It's easier to visualize how each term works by comparing it to a pipe:

- Bandwidth determines how narrow or wide a pipe is. The narrower it is, the less data can be pushed through it at once, and vice versa.

- Latency determines how fast the contents within a pipe can be transferred from the client to the server and back.

- Throughput is the amount of data transferred over a given period.

If the latency in a pipe is low but the bandwidth is also low, the throughput will inherently be low. However, if the latency is low and the bandwidth is high, the connection allows for greater throughput and is more efficient. Ultimately, latency creates bottlenecks within the network, reducing the amount of data that can be transferred over a given period.
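
To put rough numbers on the pipe analogy, here is a minimal Python sketch. It assumes a single TCP-style connection whose sender can only have a fixed amount of unacknowledged data (the window) in flight at once, so throughput is capped both by the bandwidth and by the window size divided by the round trip time. The function name and all input values are hypothetical, and the model ignores packet loss, slow start, and protocol overhead.

```python
def max_throughput_mbps(bandwidth_mbps: float, window_bytes: int, rtt_ms: float) -> float:
    """Rough upper bound on the throughput of a single connection (assumed model).

    A window-based sender can have at most `window_bytes` of unacknowledged
    data in flight, so it can deliver at most one window per round trip.
    The link itself can never carry more than its bandwidth.
    """
    rtt_s = rtt_ms / 1000
    window_limited_mbps = window_bytes * 8 / rtt_s / 1_000_000
    return min(bandwidth_mbps, window_limited_mbps)


# Same hypothetical 1 Gbps link and window, three different latencies
for rtt in (10, 50, 100):
    print(f"RTT {rtt:>3} ms -> {max_throughput_mbps(1000, 65_535, rtt):.1f} Mbps")
```

With a 65,535-byte window (the largest TCP window without the window-scaling option), the same 1 Gbps link tops out at roughly 52 Mbps at 10 ms, about 10 Mbps at 50 ms, and about 5 Mbps at 100 ms. The bandwidth never changes; only the latency does.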

Causes of network latency

Now that we know what latency is, where does it come from? Four leading causes can affect network latency:

- Transmission media such as copper wiring, wireless links, or fiber optic cables have physical limitations and can affect latency simply due to their nature.

- Propagation delay is the time it takes for a packet to travel from its source to its destination, which is limited by the speed of light in the medium.

- Routers take time to analyze the header information of a packet and, in some cases, add additional information. Each hop a packet takes from router to router increases the latency time.

- Storage delays can occur when intermediate devices such as switches and bridges store or access a packet before forwarding it.
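
These causes add up. The Python sketch below models one-way latency as propagation delay (set by the medium and the distance), plus a processing cost for each router hop, plus storage delays along the path. The class, its fields, and the per-hop and storage values are illustrative assumptions, not measured figures; the default of 4.9 µs per kilometer is the approximate propagation delay of fibre optic cable.

```python
from dataclasses import dataclass


@dataclass
class PathModel:
    distance_km: float   # length of the cable path, not the straight-line distance
    hops: int            # number of routers the packet passes through
    per_hop_us: float    # hypothetical header-processing time per router (microseconds)
    storage_us: float    # hypothetical total storage delay along the path (microseconds)
    medium_us_per_km: float = 4.9  # propagation delay of the medium (approx. fibre optic)

    def one_way_ms(self) -> float:
        propagation_us = self.distance_km * self.medium_us_per_km
        routing_us = self.hops * self.per_hop_us
        return (propagation_us + routing_us + self.storage_us) / 1000

    def round_trip_ms(self) -> float:
        # Assumes the response travels the same path back
        return 2 * self.one_way_ms()


path = PathModel(distance_km=1000, hops=10, per_hop_us=50, storage_us=500)
print(f"one way: {path.one_way_ms():.1f} ms, round trip: {path.round_trip_ms():.1f} ms")
```

With these made-up inputs, propagation accounts for 4.9 ms of the 5.9 ms one-way total, which illustrates why distance is usually the dominant factor.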

Ways to reduce latency

Latency can be reduced using a few different techniques, described below. Reducing latency helps your web resources load faster, improving the overall page load time for your visitors.

- Using a CDN: As mentioned earlier, the distance between the clients making a request and the servers responding to that request plays a key role. Using a CDN (content delivery network) helps bring resources closer to the user by caching them in multiple locations worldwide. Once those resources are cached, a user's request only needs to travel to the closest Point of Presence to retrieve that data instead of going back to the origin server each time.

- HTTP/2: Adopting the now widely supported HTTP/2 is another excellent way to minimize latency. HTTP/2 reduces latency by multiplexing many requests in parallel over a single connection, which cuts down the number of round trips between the sender and the receiver. KeyCDN proudly offers HTTP/2 support to customers across all of our edge servers.

- Fewer external HTTP requests: Reducing the number of HTTP requests applies to images as well as to other external resources such as CSS or JS files. If you reference resources hosted on a server other than your own, each one requires an external HTTP request, which can significantly increase website latency depending on the speed and quality of the third-party server.

- Using prefetching methods: Prefetching web resources doesn't necessarily reduce latency per se, but it does improve your website's perceived performance. With prefetching implemented, latency-intensive processes take place in the background while the user is browsing a particular page. By the time they click through to a subsequent page, jobs such as DNS lookups have already been completed, so that page loads faster.

- Browser caching: Another type of caching that can reduce latency is browser caching. Browsers cache certain website resources locally, which reduces latency and decreases the number of requests sent back to the server. Read more about browser caching and the various directives in our Cache-Control article.
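
As an illustration of browser caching, here is a minimal sketch built on Python's standard-library http.server that attaches a Cache-Control header to a response. The handler, the CSS body, the make_server helper, and the one-year max-age are assumptions for demonstration only; in practice this header is usually set in your web server or CDN configuration.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Assumed policy: a one-year lifetime, suitable only for assets whose URL
# changes whenever their content changes (e.g. fingerprinted file names).
CACHE_CONTROL = "public, max-age=31536000"


class CachingHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = b"body { color: #333; }\n"  # hypothetical static CSS file
        self.send_response(200)
        self.send_header("Content-Type", "text/css")
        self.send_header("Content-Length", str(len(body)))
        # Lets the browser reuse its local copy without another round trip
        # to the server until max-age seconds have passed.
        self.send_header("Cache-Control", CACHE_CONTROL)
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int = 8000) -> ThreadingHTTPServer:
    # Hypothetical helper: binds to localhost only
    return ThreadingHTTPServer(("127.0.0.1", port), CachingHandler)
```

Calling make_server().serve_forever() serves the asset on http://127.0.0.1:8000/. After the first request, a browser can satisfy repeat requests for it from its local cache until max-age expires, avoiding the round trip entirely.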

Other types of latency

Latency occurs in various environments, including audio, networks, operations, etc. The following describes two additional scenarios where latency is also prevalent.

Fibre optic latency

Latency in fibre optic data transfer can't be fully explained without first discussing the speed of light and how it relates to latency. Based on the speed of light in a vacuum alone (299,792,458 meters/second), there is a latency of roughly 3.34 microseconds (a microsecond is one millionth of a second) for every kilometer of path covered. Light travels more slowly in a cable, so the latency of light traveling through a fibre optic cable is around 4.9 microseconds per kilometer.
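
The per-kilometer figures follow directly from distance divided by speed. This Python sketch reproduces the vacuum figure from the speed of light, derives how fast light moves in fibre relative to a vacuum from the 4.9 µs/km figure, and estimates the minimum round trip over a hypothetical 5,000 km cable path. The function names are illustrative, and the estimate counts propagation only, ignoring routers, storage delays, and cable imperfections.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # in a vacuum
FIBRE_US_PER_KM = 4.9                 # approximate figure for fibre optic cable


def vacuum_us_per_km() -> float:
    # time = distance / speed, converted from seconds to microseconds
    return 1_000 / SPEED_OF_LIGHT_M_PER_S * 1_000_000


def fibre_speed_fraction() -> float:
    # Light takes longer per kilometer in fibre, i.e. it moves more slowly
    return vacuum_us_per_km() / FIBRE_US_PER_KM


def fibre_round_trip_ms(path_km: float) -> float:
    # Propagation only: out and back, converted from microseconds to milliseconds
    return 2 * path_km * FIBRE_US_PER_KM / 1_000


print(f"{vacuum_us_per_km():.3f} us/km in a vacuum")
print(f"light in fibre travels at about {fibre_speed_fraction():.0%} of its vacuum speed")
print(f"5,000 km path: at least {fibre_round_trip_ms(5_000):.0f} ms round trip")
```

This prints about 3.336 µs/km, roughly 68%, and at least 49 ms, a floor that no amount of server tuning can remove.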

Depending on how far a packet must travel, the latency can quickly add up. Cable imperfections can also degrade the connection and increase the latency incurred by a fibre optic cable.

Audio latency

This form of latency is the time difference between a sound being created and being heard. The speed of sound plays a role here, and it varies with the medium the sound travels through, e.g. solids vs liquids. In technology, audio latency can come from various sources, including analog-to-digital conversion, signal processing, and the hardware and software used.
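
Two of the delays described here can be estimated with simple division. The sketch below computes how long sound takes to cover a distance in air versus water, and the delay added by an audio buffer, which must fill before it can be processed. The speeds are approximate reference values, the function names are illustrative, and the 256-frame, 48 kHz buffer is a hypothetical configuration.

```python
SPEED_OF_SOUND_AIR_M_PER_S = 343.0      # dry air at about 20 °C
SPEED_OF_SOUND_WATER_M_PER_S = 1_480.0  # water, approximate


def acoustic_delay_ms(distance_m: float, speed_m_per_s: float = SPEED_OF_SOUND_AIR_M_PER_S) -> float:
    # Time for sound to cover the distance through the given medium
    return distance_m / speed_m_per_s * 1_000


def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    # A buffer of N frames must fill before software can process it
    return buffer_frames / sample_rate_hz * 1_000


print(f"10 m through air:   {acoustic_delay_ms(10):.1f} ms")
print(f"10 m through water: {acoustic_delay_ms(10, SPEED_OF_SOUND_WATER_M_PER_S):.1f} ms")
print(f"256-frame buffer at 48 kHz: {buffer_latency_ms(256, 48_000):.2f} ms")
```

Ten meters of air adds about 29 ms, the same distance through water about 7 ms, and the buffer alone about 5.3 ms.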

How to test network latency?

Network latency can be tested using ping, traceroute, or MTR, each of which reports latency at a different level of detail. While ping can be used to determine whether a particular IP is reachable and how long a round trip to it takes, the traceroute command tracks the path that packets take to reach the host. It shows you how many hops it takes to reach the host and how long each hop takes, which allows you to diagnose possible bottlenecks in the network. MTR (also known as My Traceroute) essentially combines ping and traceroute and provides the most detail.

With MTR, the user can generate a report that lists every hop a packet takes on its way from point A to point B. The report includes details such as the loss ratio and the average latency for each hop.
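
To show what the loss ratio and average latency in such a report mean, here is a Python sketch that summarizes the probes sent to a single hop, similar in spirit to MTR's Loss%, Avg, Best, and Wrst columns. It is not MTR's implementation; the function name is hypothetical and the sample values are made up.

```python
from statistics import mean
from typing import Optional, Sequence


def summarize_hop(samples_ms: Sequence[Optional[float]]) -> dict:
    """Summarize the probes sent to one hop; None marks a probe with no reply."""
    if not samples_ms:
        raise ValueError("need at least one probe")
    replies = [s for s in samples_ms if s is not None]
    return {
        "sent": len(samples_ms),
        "loss_pct": 100 * (len(samples_ms) - len(replies)) / len(samples_ms),
        "avg_ms": mean(replies) if replies else None,
        "best_ms": min(replies, default=None),
        "worst_ms": max(replies, default=None),
    }


# Hypothetical round trip times (ms) for four probes to a single hop
print(summarize_hop([10.2, 11.0, None, 10.8]))
```

For the four sample probes, one reply is missing, so the loss is 25%, and the average of the three replies is about 10.67 ms.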

Curious to find out the latency that applies to you?

Use our free traceroute test tool, which is unique in that it displays the hops required to reach a particular domain from 10 different locations.

You can also use our free ping test tool, which allows you to test different sites simultaneously. A lower ping time means a more responsive connection.

We recommend reading our traceroute command article, where we go into more depth about MTR and traceroute. The article will also help you properly analyze your test results.

How to measure network latency?

Essentially, latency is measured using one of two methods:

- Round trip time (RTT)

- Time to first byte (TTFB)

The round trip time can be measured using the tools above and is the time between when a client sends a request to the server and when it receives the response. The TTFB, on the other hand, measures the time between when a client sends a request to a server and when it receives the first byte of data. You can use our performance test tool to measure the TTFB of any asset across our network of 10 test locations.
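
If you want to measure both values from your own machine, the Python sketch below times the TCP handshake (which takes roughly one round trip) and then the wait between sending a plain HTTP GET and receiving the first byte, matching the TTFB definition above. It assumes an unencrypted http:// URL with a host name or IPv4 address; HTTPS would add a TLS handshake that this sketch does not perform. The function name is hypothetical, and TTFB definitions vary between tools, some of which also count DNS and connection setup.

```python
import socket
import time
from urllib.parse import urlsplit


def connect_and_ttfb_ms(url: str, timeout: float = 5.0) -> tuple[float, float]:
    """Return (tcp_connect_ms, ttfb_ms) for a plain http:// URL.

    tcp_connect_ms: time to complete the TCP handshake, roughly one RTT
                    (includes the DNS lookup if the URL uses a host name).
    ttfb_ms:        time from sending the request to receiving the first
                    byte of the response.
    """
    parts = urlsplit(url)
    host, port = parts.hostname, parts.port or 80
    path = parts.path or "/"
    host_header = host if port == 80 else f"{host}:{port}"

    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        connected = time.perf_counter()
        request = f"GET {path} HTTP/1.1\r\nHost: {host_header}\r\nConnection: close\r\n\r\n"
        sock.sendall(request.encode("ascii"))
        sent = time.perf_counter()
        sock.recv(1)  # blocks until the first response byte arrives
        first_byte = time.perf_counter()

    return (connected - start) * 1_000, (first_byte - sent) * 1_000
```

For example, connect_and_ttfb_ms("http://example.com/") returns a (connect, TTFB) pair in milliseconds. Because the connect time here also includes the DNS lookup, putting an IP address in the URL gives a cleaner round trip estimate.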

Throughput calculator

If you're curious about how latency affects throughput in a hypothetical situation, you can use a throughput calculator. Simply define the application, latency value, distance, etc. Once complete, look at the "unoptimized" bar to estimate your expected max throughput.

Summary

Hopefully, this article has helped answer what latency is and provided readers with a better understanding of what causes it. Latency is an inevitable part of today's networking ecosystem and is something we can minimize but not eliminate. However, the suggestions mentioned above are essential steps to take in reducing your website's latency and helping to improve page load times for your users. After all, in today's internet age, the importance of website speed comes down to milliseconds and can be worth millions of dollars in gained or lost profits.